ChatGPT, Please Take My Job!

AI Won't Cause Permanent Unemployment—But It Will Disrupt Labor Markets for the Better

By subscribing you’ll join over 24,000 people who read Apricitas weekly!

The Text of This Article is 100% Human Generated

The last few months have been a whirlwind for commercially available artificial intelligence. Image generation programs like DALL-E 2 and Stable Diffusion have stolen headlines for creating art that is nearly on par with the work of experienced human artists. After the wildly successful launch of ChatGPT, OpenAI’s text-based AI chatbot, major companies like Google and Microsoft are racing to integrate AI into their product suites. AI startups are raising millions of dollars on burgeoning hopes for the industry: hopes that AI will be able to revolutionize law, finance, medicine, business administration, content generation, transportation, and so much more.

Lots of artists, lawyers, doctors, and truck drivers are rationally nervous about what this means for their jobs.

These AIs are undoubtedly impressive, representing a massive leap forward for the industry and an acceleration of AI development. Still, they largely remain “narrow”: they are good at one specific set of tasks (creating images, generating text) but are fundamentally unable to do anything outside the fields they were designed for. The definition of “narrow” is clearly widening, with programs like ChatGPT able to approach tasks like research, poetry writing, coding, and more, but these programs individually remain unable to perform anything close to the range of tasks a normal human can. Try as you may, you’ll never get ChatGPT to drive a car, so the jobs of truck drivers remain safe for the moment.

The hope (and fear) is that computers will one day approach Artificial General Intelligence (AGI), matching the cognitive capabilities of humans, ingesting the massive range of informational inputs a normal human being can, and performing the same wide range of tasks. An AGI could solve complex problems with little to no direct instruction, could make independent choices and decisions, could research and develop new information, and could manage a variety of complex physical tasks in real time, all as well as or better than a human could. At that point, the worried say, AGI would lead to millions of workers being permanently replaced by computers with no ability to qualify for other jobs, causing a massive permanent rise in global unemployment.

There’s a big debate about whether AGI is actually possible (or moral, or a good idea) but I want to put that all aside for a moment. For the sake of argument, imagine that:

Computers will soon match—and exceed—the mental abilities of human beings across the board. In the same way that no human player can beat the best chess AIs, no human being will be smarter than the best AGIs.

Deployment of AGIs will be ubiquitous and unconstrained by software limitations—in the same way that the vast majority of Americans have access to smartphones and web browsers today, the vast majority of Americans will have access to AGIs in the future.

Efforts to legally safeguard employment in the face of AGI will all fail—no laws will be passed to ensure that, for example, your driver, lawyer, cook, doctor, or financial advisor must be a human being. AGIs and machines will be legally interchangeable with human workers across all occupations and sectors.

Even in this scenario, which I have intentionally made as extreme as possible, I believe global human employment would rise, not fall, with the development of AGI, as it has with every previous wave of innovation and automation. There would be a massive reallocation of workers across sectors and companies, a destabilization of many industries, and a host of short-term losers, but on net, the effects would be extremely positive for economic output, growth, and employment.

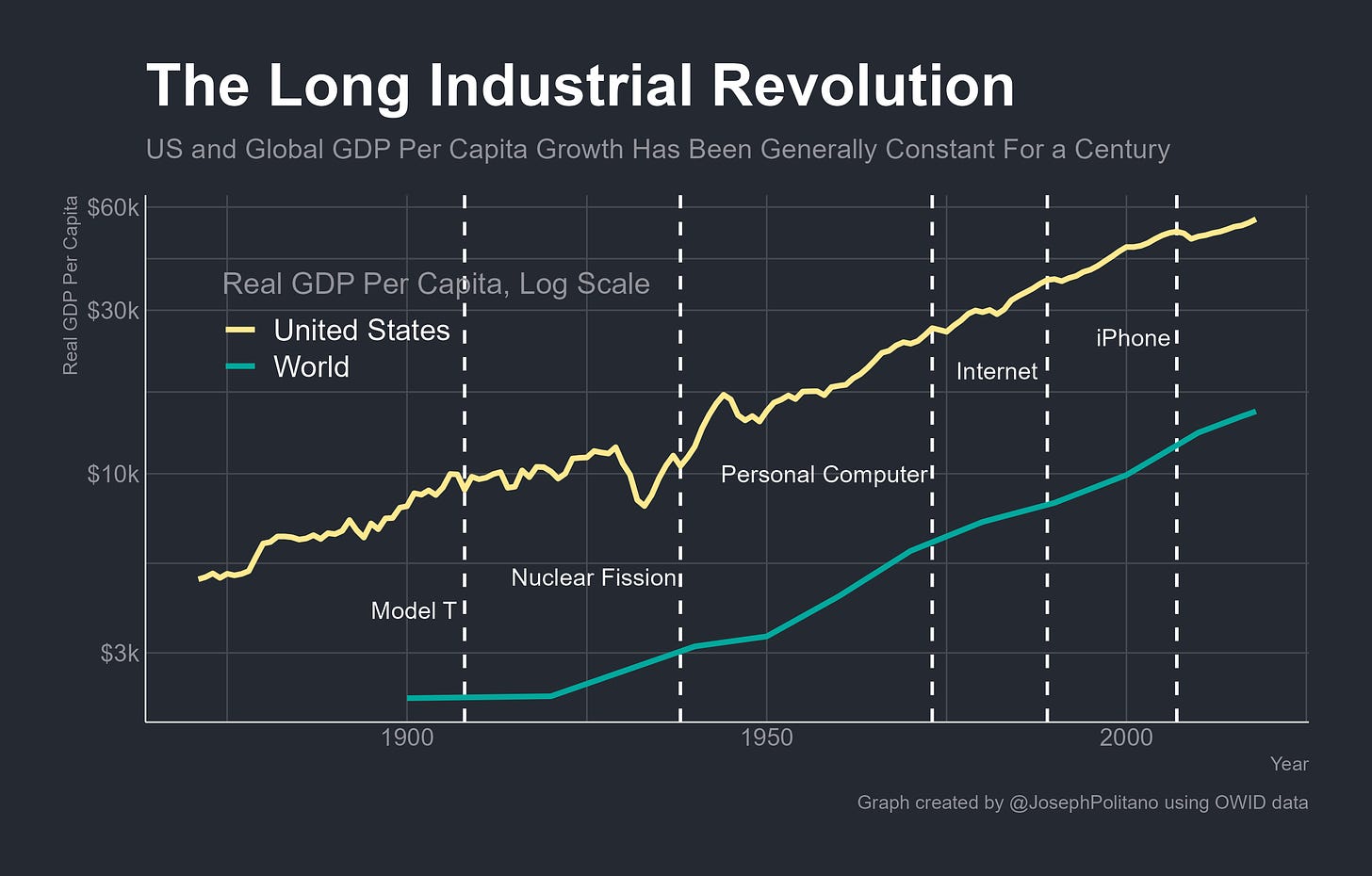

But I also want to constrain and contextualize the hype a little bit. The innovations in AI are tremendous and exciting, but I don’t yet believe they represent “the next industrial revolution” so much as the continuation of innovation and automation at the same pace it’s held since the start of the second industrial revolution in the 1870s. The car, the airplane, nuclear fission, the fax machine, the computer, the internet, smartphones—none of these technologies represented a permanent rise in the growth rate of global productivity, just further steps on the relatively constant rate of productivity growth that began just after America’s civil war.

From the bottom of my heart, I truly hope this time is different and that AI unlocks the next tier of productivity growth. America’s economy, like many in the world, has wallowed in an era of relative stagnation that began after the 2008 recession, and that stagnation is a large part of the reason why, for the first time in modern history, nearly half of Americans don’t earn more than their parents. We will face global climate, demographic, healthcare, and poverty challenges that would all be much easier to solve if AI truly did bump us into the next tier of economic growth in the way its proponents hope. So even if I don’t buy all the hype, I’m still rooting for the robots: a world where AI truly is a disruptive and revolutionary economic technology is one that is good for workers and good for people.

Humans Are Not Horses

The general argument for AI-driven systemic unemployment is something like this:

Sure, prior bouts of automation increased productivity without permanently boosting unemployment (and in fact increased employment by lifting women from household production to market production). But no previous innovation has ever categorically exceeded human capabilities in the way AGI will.

Think about horses: for thousands of years, innovations by humans increased the number of horses “employed” in transportation, communication, agriculture, and other industries. But when automobiles came around and categorically exceeded horses’ capabilities, the economy moved on quickly. Now, the only horses that remain “employed” are in circuses or at racetracks.

Humans, however, aren’t like horses, for a few key reasons. The first is that the economy is just a social construct to produce the goods and services that humans deem valuable. Oil is just a chemical compound that sits under the ground; the only reason it is valuable is that humans use it to power cars, drive trucks, fly planes, and so on, and the only reason those vehicles are valuable is that humans find value in traveling and transporting goods. Art, music, games, and movies are just noises, lights, and pictures that people consume because they derive enjoyment from them. Second, humans are autonomous beings who demand items and independently produce items for their own benefit. Left on their own, the majority of horses would just frolic in fields; the majority of humans are independently driven to produce far, far beyond what is necessary for basic survival.

These points are key—the economy is just a social construct to produce what humans find valuable, and humans autonomously choose to work when it benefits them. The third key point is that there is no upper limit to the amount of value humans can derive from the economy. The people reading this are statistically likely to be high-income American workers, among the richest people in history—if they wanted to, they could work 5-10 hours per week and still take home 2-3x more than the current median global income, which is many times higher than historical global income levels. They choose to keep working because they derive benefits from the extra income that affords them high-end cars, large houses, advanced medical care, international vacations, and more.

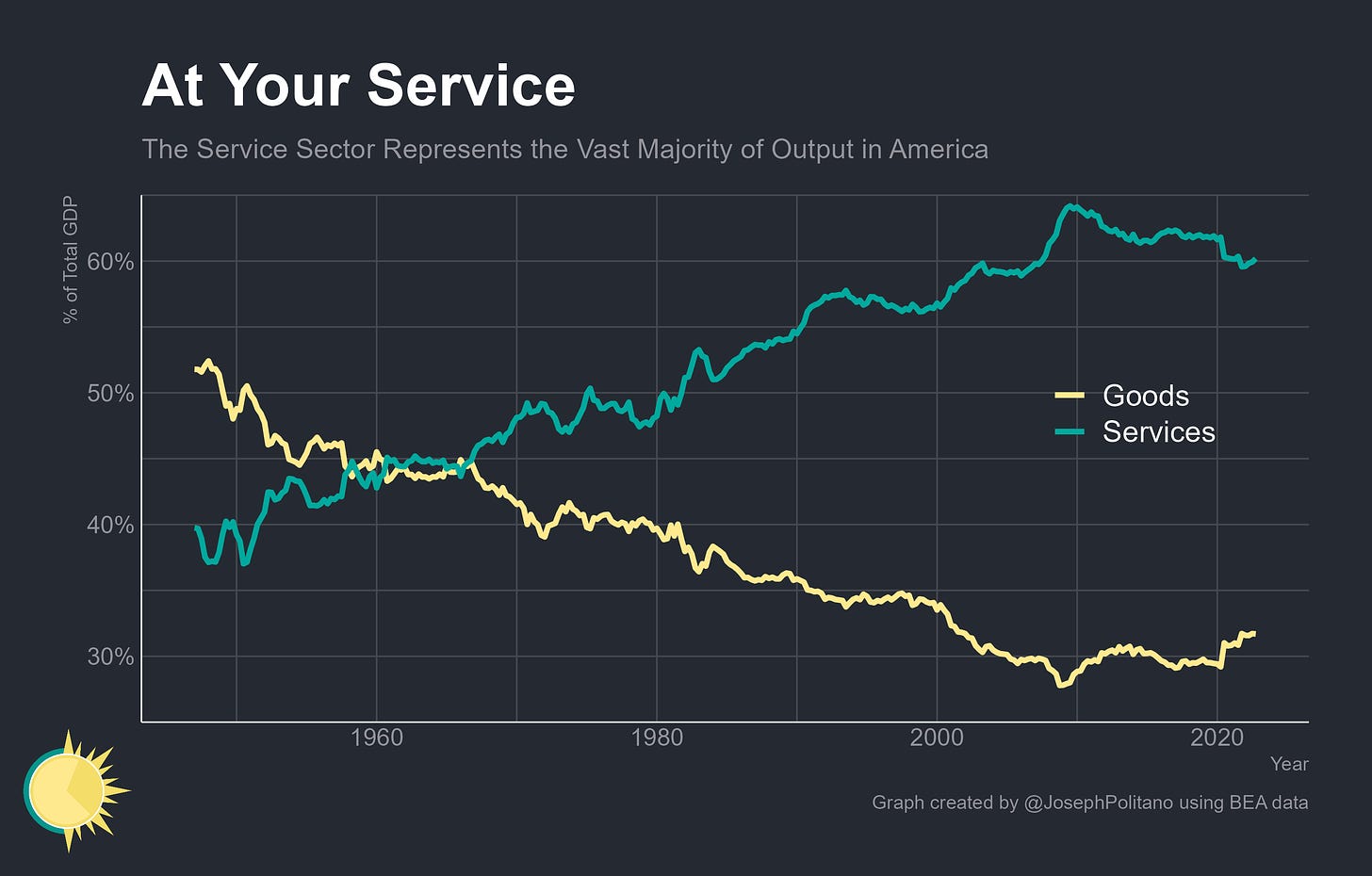

To be fair, there are physical limits to goods demand that currently bind: it would be impossible for every person to own 20 planes given the amount of raw resources currently on Earth (and space mining remains future tech). More importantly, at some point, most people decide they’ve had enough and that the value of having more stuff is literally not worth the space it takes up. However, there is no constraint on the amount of services people can demand. Medical services are a great example: short of the invention of immortality, people have unlimited capacity to demand more personalized medical care that can lengthen their lives and raise their quality of life. What all of that means is that functionally there is no limit to the amount of valuable services that can be performed for humans. Despite the pandemic, which supercharged demand for goods, Americans currently spend twice as much on services as on goods, and the US has one of the highest service shares of GDP in the world.

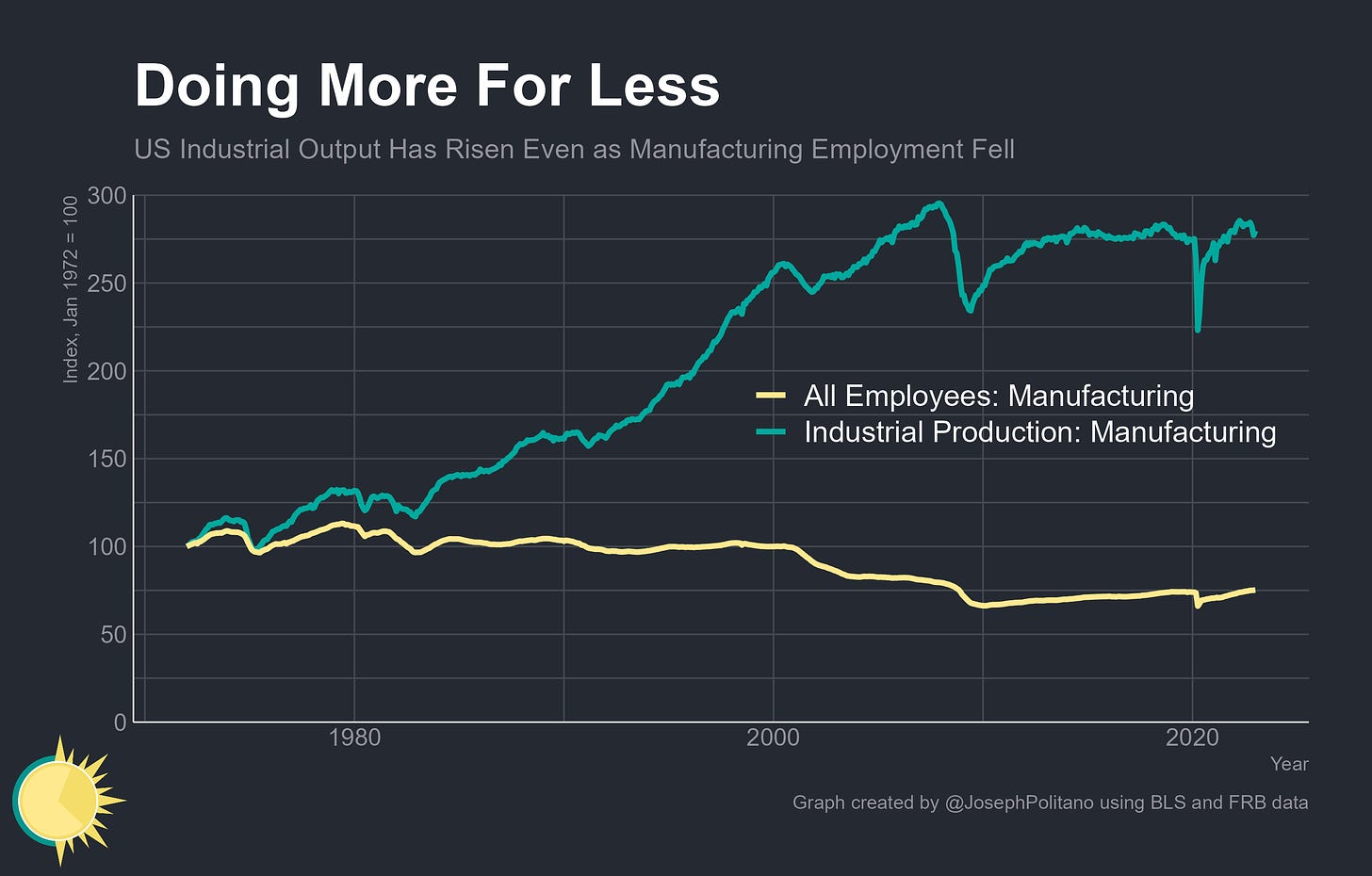

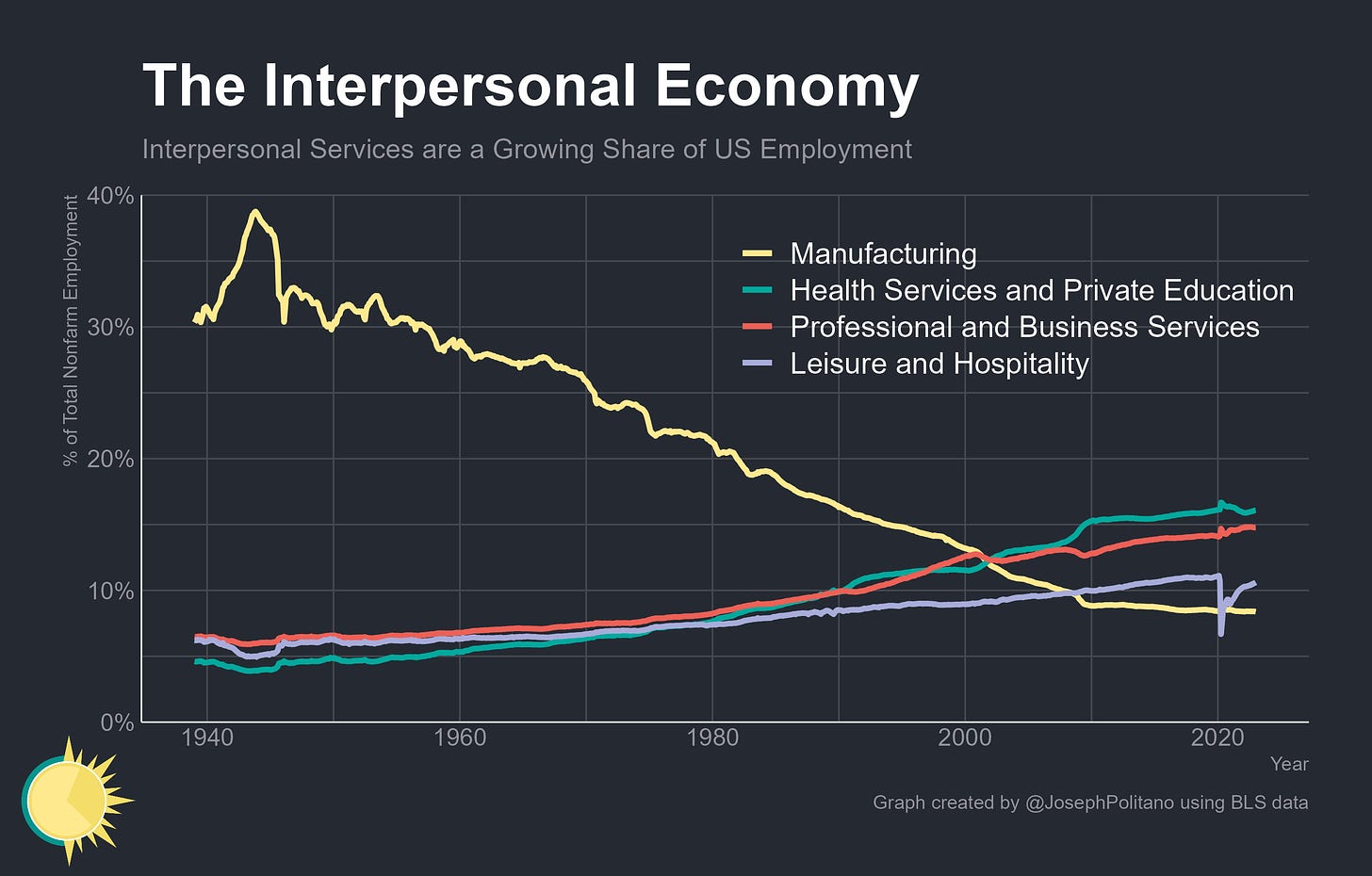

It’s also worth remembering that machines have already exceeded human capabilities across a number of dimensions without causing structural unemployment. Try benching the 4,000 lbs that a forklift can pick up if you want; it won’t end well. The key industries most subject to physical automation, like manufacturing, have seen steep declines in employment even as output massively increased.

However, and this is key, aggregate employment rose dramatically even as manufacturing employment fell. That was propelled by the rapid increase in service-sector employment (cooks, teachers, doctors, computer technicians, retail workers, lawyers, accountants, etc.) from just about 60% of workers at the end of World War II to more than 90% of workers today.

Automation, in this case, did not eliminate the need for work but rather increased the complexity and quantity of production while changing the comparative advantages of human labor and machine “labor.” In other words, productivity growth does not reduce employment: it increases consumption and allows workers to move into more productive tasks, without eliminating jobs in aggregate. As a mental model, you can imagine it as a supply chain. Automation of clothing manufacturing will increase demand for semiconductors, semiconductor manufacturers will need more designers, and those designers will need teachers, professors, and daycare providers to do their work, so employment in the service sector rises to support and enable advanced production in the manufacturing sector. As the output level and complexity of the economy increase, workers specialize further and further into narrower occupations but don’t stop working, and in fact tend to earn and consume more. Or, for a more poignant and current example, automation of image generation will likely enable an increase in the production and consumption of more complex media: movies, video games, VR experiences, etc.

The key point is that some comparative advantage (a difference in relative costs, not absolute costs) must definitionally exist between human and machine labor. Even in a situation where humans are less productive per hour worked than machines across all tasks, economic output increases when humans and machines each specialize in the areas where they are relatively most productive and then “trade,” for the same reason it makes sense for countries or people who are less productive across the board to still work and trade with those who have an absolute advantage. This principle extends to plenty of cognitive tasks as well. Computers can already perform math, long-distance communication, content recommendation, data processing, information storage, reading, searching, and a wide range of other tasks faster and better than any human ever could, and that has not caused a rise in unemployment.
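To make the comparative advantage argument concrete, here is a minimal sketch with made-up numbers. The task names (`analysis`, `care`), the hourly output figures, and the 10-hour day are all illustrative assumptions, not figures from any real dataset. The machine is better at both tasks in absolute terms, yet the human still has the lower opportunity cost in one of them.

```python
# Hypothetical output per hour for each worker and task (illustrative only).
OUTPUT_PER_HOUR = {
    "machine": {"analysis": 100.0, "care": 10.0},
    "human": {"analysis": 1.0, "care": 5.0},
}
HOURS = 10.0  # assumed hours available to each worker


def opportunity_cost(worker, task, other_task):
    """Units of other_task given up to produce one unit of task."""
    rates = OUTPUT_PER_HOUR[worker]
    return rates[other_task] / rates[task]


def comparative_advantage(task, other_task):
    """Worker with the lowest opportunity cost of producing task."""
    return min(
        OUTPUT_PER_HOUR,
        key=lambda worker: opportunity_cost(worker, task, other_task),
    )


def no_trade_output():
    """Total output if each worker splits their hours evenly between tasks."""
    totals = {"analysis": 0.0, "care": 0.0}
    for rates in OUTPUT_PER_HOUR.values():
        for task in totals:
            totals[task] += rates[task] * HOURS / 2
    return totals


def specialized_output():
    """Human does only care; the machine tops care up to the no-trade
    level, then spends its remaining hours on analysis."""
    machine = OUTPUT_PER_HOUR["machine"]
    target_care = no_trade_output()["care"]
    human_care = OUTPUT_PER_HOUR["human"]["care"] * HOURS
    machine_care_hours = max(0.0, target_care - human_care) / machine["care"]
    return {
        "analysis": machine["analysis"] * (HOURS - machine_care_hours),
        "care": human_care + machine_care_hours * machine["care"],
    }


if __name__ == "__main__":
    print("care:", comparative_advantage("care", "analysis"))
    print("analysis:", comparative_advantage("analysis", "care"))
    print("no trade:", no_trade_output())
    print("specialized:", specialized_output())
```

With these assumed numbers the human specializes in `care` and the machine in `analysis`. Total `care` output stays at 75 units while total `analysis` output rises from 505 to 750 units, even though the machine is more productive at both tasks.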

In the Future, Your Job Could Be…

Since comparative advantage is definitionally always present, there will always be gains to “trade” between humans and machines. Companies and workers will continue to seek out opportunities to exploit that comparative advantage, so society will continue to find places for humans to work no matter how good machines get. But where specifically will humanity’s relative advantage be if AGI overtakes our cognitive abilities?

That’s a hard question to answer, but I will give a few points. First, think about Moravec's paradox—what humans would call “higher reasoning” actually requires far less computational power than the sensory, motor, and perception skills that most able-bodied people take for granted. Generating text just requires inputting and outputting computer-readable information, but walking requires collecting a wide variety of visual, audio, and sensory inputs while operating complex muscle/machinery in real-time. Boston Dynamics, well-known for their dog and humanoid robots, have actually used little to no AI in many of their machines. Given the power, battery, mobility, and computational requirements of operating AGI-driven machines, it’s likely that human beings would retain comparative advantages in a range of non-repetitive physical tasks that require dextrous movement—think medical assistance, construction and building trades, information technology services, elements of food service, etc.

On a related note, a lot of human work in a hypothetical AGI future would be solving “last mile” problems, both physically and metaphorically. The last mile problem comes from travel and parcel delivery, with the general idea being that getting products to their final destination is much more difficult than getting them 90% of the way there (e.g., flying a package from LAX to JFK airport is easy, delivering it to a front door in New Jersey is hard). In a situation where road transportation is automated, AGI drivers could take workers to houses where they just run the packages up to front doors, or AGI cooks could make food that’s served by human waiters. The built environment of our society, like much of our economy, is designed by humans for humans’ utility, and so humans will have unique comparative advantages in accessing large parts of it.

Think about the cognitive “last mile” too: AGI doctors could create perfectly tailored treatments and prescriptions, but those would be meaningless if patients could not be convinced to follow them. In an AGI future, a lot of human labor would consist of interpreting and interfacing between machines and humans, taking the readouts from programs and bringing them into the real world to help real people. At a basic level that’s not an especially radical proposition, since interfacing between computer data and real people is the basic function of my job (and the jobs of many white-collar workers!). But taken seriously, it’s an essential part of functional AGI implementation: humans will likely have comparative advantages in the physical implementation of many AGI decisions, communicating with other humans in physical space, training and guiding AGIs, and correcting for nonhuman errors.

But also, humans are naturally going to retain a comparative advantage in any interpersonal sector where people are an essential part of the services demanded. We are naturally social beings, and we derive immense utility from the presence, assistance, and camaraderie of other people. In sectors like education, healthcare, childcare, leisure and hospitality, arts, entertainment, and more, humanity itself is a core part of the output delivered, and I would expect consumption in these sectors to continue its long-run growth in an AGI future.

Conversely, AGI is likely to have a significant comparative advantage wherever services can be delivered at scale, where inputs and outputs are easily machine-readable, in cognitive tasks that are beyond the realm of human intelligence, and in tasks where human limitations (needing to sleep and eat, not wanting to die) are significant drawbacks. Think research, transportation, mathematics, software development, content generation & recommendation, highly risky physical tasks, interfacing with machines and computers, and much more.

However, keep in mind that this is not a black-and-white human vs AGI line but an extremely heterogeneous mix—talking about “all the tasks an AGI could do” is just as nonsensical as trying to list off all the tasks a human could do, and efficiency demands that there will certainly be intense labor specialization among AGIs in the same way there currently is among humans. It’s likely that many tasks that look ripe for automation (business services, research, software development) will gain both human and AGI employment as they continue to become more important sectors of the economy while some areas (publishing, content generation, manufacturing, etc) continue to lose human workers. But we should be humble and acknowledge that this is all extremely difficult to predict ex-ante—ten years ago, the growing concern was that self-driving cars and warehouse automation would put millions of truck drivers and fulfillment workers out of a job. Instead, employment in those sectors has shot to record highs—with the industries complaining about ongoing shortages of workers.

But I stand by this statement: in a world of AGI, global human employment would rise, not fall. That’s because AGI would be a widely deployable computer technology like smartphones and the internet—the kind of technology that is able to penetrate rapidly, even into countries with comparatively low income levels. The resulting rise in global productivity, as in previous technological deployments, would help increase economic output and create market employment opportunities in low-income countries, especially for women. Even in countries with already-high employment rates, the disruption from AGI would be a net positive for workers on the whole—increasing their abilities, pulling them into more productive jobs, raising their pay, and affording them more and better goods and services. The loss of jobs in some sectors would be more than made up for by the wide range and higher quantity of better employment opportunities that open up.

Being Realistic About AI

Coming down a moment from the theoretical, I want to be realistic about AI, AGI timelines, and their combined economic potential. Proponents (especially those with something to sell) like to say that AGI is just around the corner, and that AI developments represent a transformative change on the scale of the industrial revolution rather than just the continued development of computer capabilities. I’ve seen forecasts ranging anywhere from 8% to 50% annual global GDP growth as a result of AI and AGI development, which is totally out of step with what we currently know about AI capabilities and what financial markets are currently saying.

For one, even when computational capabilities start approaching AGI, the result will still just be comparable to human intelligence, and there are already more than 8 billion human minds on the planet. The first true AGI would be distributed, scalable, and able to easily interface with computers, all of which would be major advances, but it would still just be a computer with human intelligence.

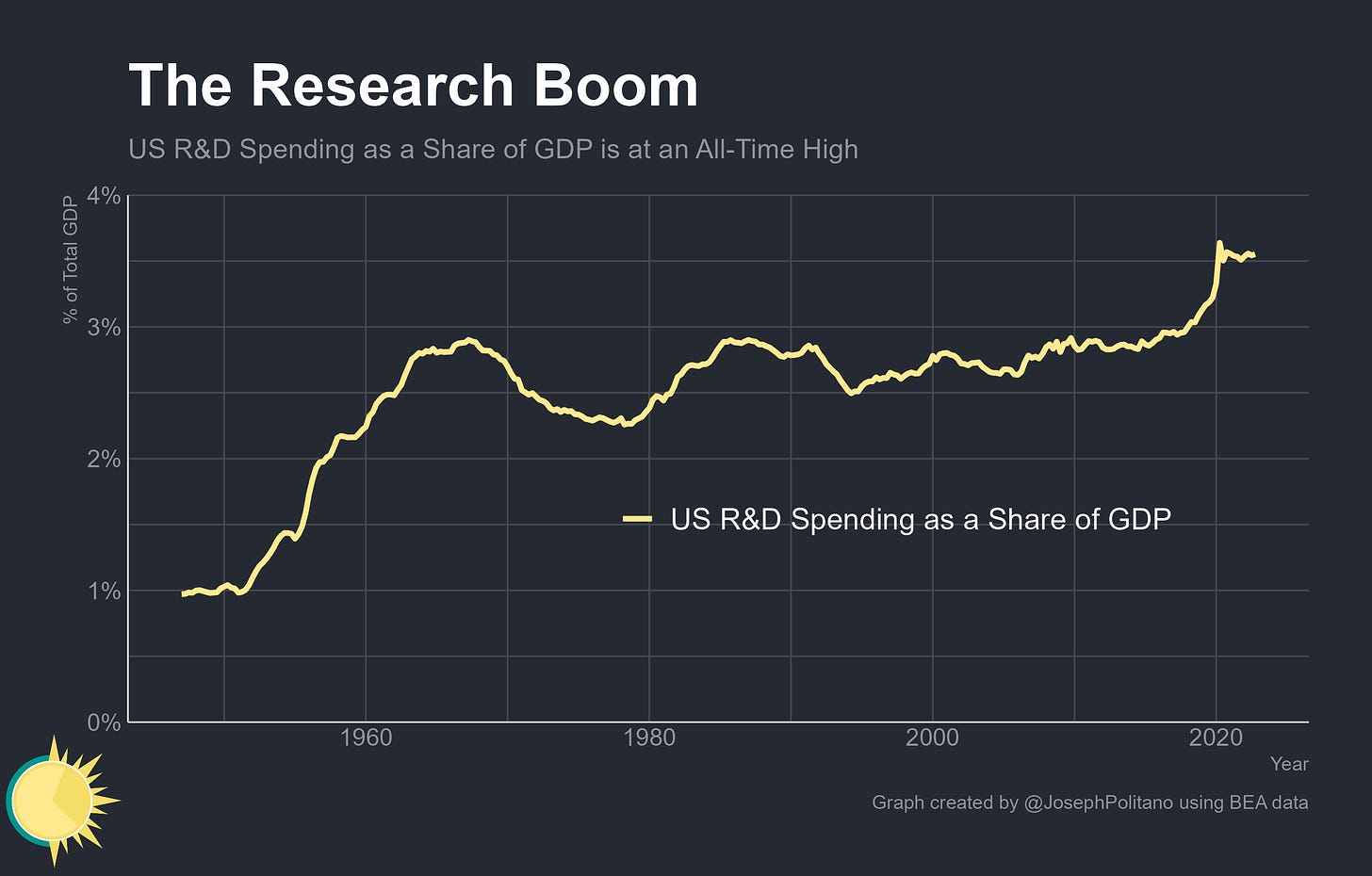

Plus, most of long-run economic growth comes from the creation and development of new ideas—which is problematic given that new ideas require more and more resources to develop as the economy gets more complex. Neither the rising share of global GDP going to research and development nor the millions more researchers working across the world today have been able to raise productivity growth in high-income countries—it’s not obvious why AI should be able to do what millions across the world couldn’t.

That doesn’t mean AI won’t be transformative—the internet was transformative, and it didn’t cause a permanent rise in global productivity growth! It’s just that even 8% productivity growth is almost unfathomably high for a modern high-income nation. Even the fastest growing non-petrostates in the world—which are mostly low and middle-income nations whose growth is driven by the deployment of existing technologies rather than the development of new ones—can barely notch that level of growth. To contextualize, 8.5% annual growth would mean the global economy would be nearly 3x larger than current consensus forecasts by 2040.
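The compounding behind that comparison can be sketched in a few lines. The 3% baseline growth rate and the 2023 start year below are assumptions chosen for illustration; the text does not say which consensus forecast or start year it uses, so the result will shift with those inputs.

```python
def size_ratio(fast_rate, baseline_rate, years):
    """How many times larger an economy growing at fast_rate ends up,
    relative to one growing at baseline_rate, after the given years."""
    return ((1 + fast_rate) / (1 + baseline_rate)) ** years


if __name__ == "__main__":
    START_YEAR = 2023      # assumed start year
    BASELINE_RATE = 0.03   # assumed consensus growth rate
    FAST_RATE = 0.085      # the 8.5% figure from the text
    years = 2040 - START_YEAR
    ratio = size_ratio(FAST_RATE, BASELINE_RATE, years)
    print(f"{ratio:.2f}x larger by 2040")
```

Under these assumptions the ratio lands between 2x and 3x; a lower baseline rate or an earlier start year pushes it closer to 3x.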

More broadly, if markets truly believed that AGI would unlock anything approaching 8-50% annual GDP growth in the near future then you would expect absolutely insane rises in global share indices and real interest rates. In reality, major share indices are down significantly from 2021, as are the majority of the tech companies that should benefit most from AI developments, and real interest rates, while rising, remain at historically low levels. The Austrian century bond, maturing in 2120, yields a meager 2.5% before accounting for inflation.

Right now, we’re still struggling to automate driving, which should be a comparatively rudimentary task for AIs to achieve. Tesla’s first iteration of Autopilot launched in 2013, and the company still hasn’t been able to use narrow AI to reach human levels of driving capability. Plus, AI output will also be physically constrained by our ability to produce more semiconductors and other computer parts at continually higher quantities and complexities. I don’t doubt that AI will eventually automate tasks like driving or exceed human intelligence; I just still think it’s more likely that AI joins the internet as part of the long line of transformative technologies that, despite changing the world, didn’t create a permanent upward jump in productivity growth.

Don’t Worry About Work

It’s understandable why people would be nervous about permanently losing their job to automation: a job is most people’s most valuable asset in addition to being a core part of their personal identity. But I am not worried about the planet running out of jobs because I am not worried about humanity running out of valuable work to do. Today, billions of people are still reliant on subsistence or smallholder agriculture for the bulk of their income. We face a global climate crisis because of the planet’s reliance on fossil fuels to meet most of our energy needs. Aging global populations will require unprecedented levels of healthcare and social assistance. Acute housing shortages are a massive burden to people in most high-income nations. The idea that, amidst all that, we would find ourselves without enough to do is almost laughable. I don’t worry about tech causing a persistent lack of valuable work for humans; I worry about our institutions failing to manage the economy in such a way that workers are enabled to do the work that must be done.

Indeed, the recent historical record is one where employment failures have slowed technological progression, not one where technological advancement is damaging the labor market. The persistent slowdown in US productivity growth since the turn of the millennium, and especially since the Great Recession, has been largely related to the macroeconomic failure to prevent and respond to recessions and the resulting decline in employment rates. In other words, the primary barriers to employment have been political and cyclical, not technological—and it is more likely that AI-driven technological growth will help us break out of that low-growth low-employment equilibrium than cause persistent joblessness.

But I do think we should take the concerns of AI-wary people seriously, if only because most of what they worry about are already problems. Worried about the fortunes of laid-off workers? Even in a good year, tens of millions of Americans will involuntarily lose their jobs. Worried about poverty? That’s concentrated among children, the elderly, the young, and people with disabilities—people who are already much more likely to be nonworkers. Worried about inequality? That’s been rising for decades. These are serious problems with legitimate policy implications, but we should evaluate policy interventions by how they would help the economy today, not based on how they might help in the unlikely event of mass technological unemployment.

So I’m still rooting for the robots—the world where they turn out as useful and ubiquitous as proponents hope is one where workers, people, and society are better off. But I also know that realistically they might not be quite as revolutionary as people hope.

Great article! Particularly about the future roles of human labor due to our comparative advantages with respect to AI.

I believe another important job for humans to perform is providing responsibility for decisions, including culpability for bad decisions. E.g., AI can already answer many common medical questions with comparable accuracy to human doctors. [1] Yet patients will demand human responsibility for major medical interventions, and even for the decision to do nothing. That ensures that there is someone to fire, sue, or jail for egregiously bad decisions.

In looking at the future of work, I think we can take inspiration from the role of Vice President at financial firms; a relatively junior role that can make up the bulk of the employment for many key divisions. It’s my understanding that this is not just title inflation for the sake of workers and customers but instead a legal necessity. Many actions taken on behalf of a bank can only be performed by an executive.

Even when a firm itself can be culpable, and thereby sued and fined, we humans simply demand that a specific person take responsibility for important decisions. With AI bringing massive amounts of information and intelligence to bear, our society can now make many more decisions with higher quality. Yet we'll still want a human to blame when things go wrong.

[1] https://arxiv.org/pdf/2212.13138.pdf | summarized in https://twitter.com/emollick/status/1610261628607512576

Love this - very interesting